Replacing freshman comp with dozens of small groups run like graduate seminars is expensive and hard to imagine. But it would create a generation of students who wouldn't use an AI to write their essays any more than they'd ask an AI to eat a delicious pizza for them. We should aspire to assign the kinds of essays that change the lives of the students who write them, and to teach students to write that kind of essay.

Activity tagged "artificial intelligence"

"it isn't just X—it's Y" is by far the most annoying ChatGPTism

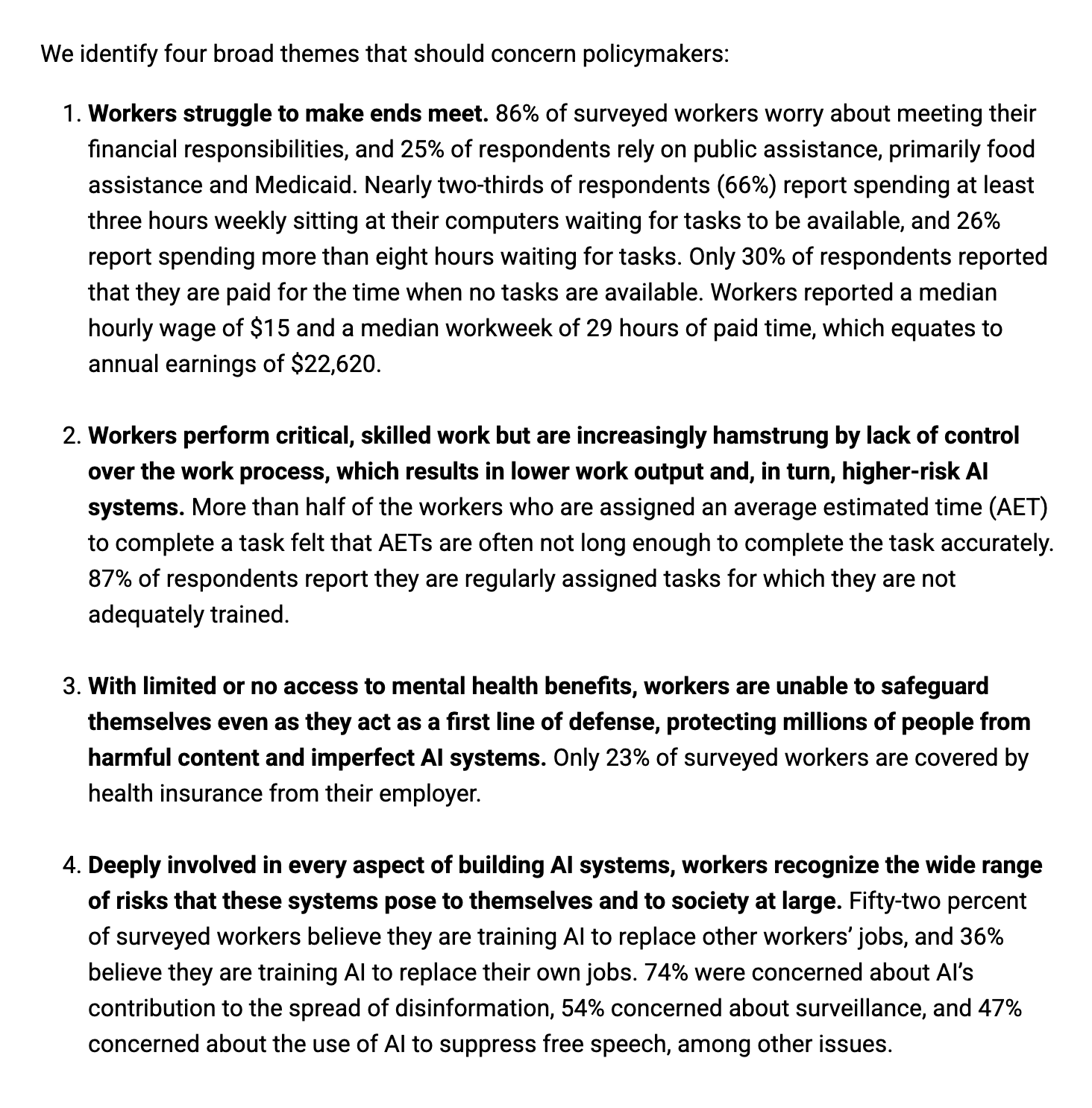

A podcast discussion with the AI Now Institute's Sarah Myers West, Data and Society's Maia Woluchem, and Athena for All's Ryan Gerety.

After the failure of S.B. 1047, a new AI law drops the kill switch in favor of a disclosure mandate.

Someone should probably inform the White House's "AI & Crypto Czar" that no one is forcing AI companies to train their models on Wikipedia.

You would think the obvious solution to "the volunteer-powered project we all train our AI models on for free isn't adequately twisting reality to our political views" would be "... and so we stopped training on it" and not "... and so we will force the volunteers to bend to our will"

too many people are doing a great disservice to their writing by garnishing it with generative-ai (artificial intelligence) images. ethics and values aside (lol), it looks tacky and it cheapens the words around it. there are so many human-created, realistic, and beautiful images available for you to use on your blogs, websites, and projects for free. the following is a list that i believe just scratches the surface of what's available out there.