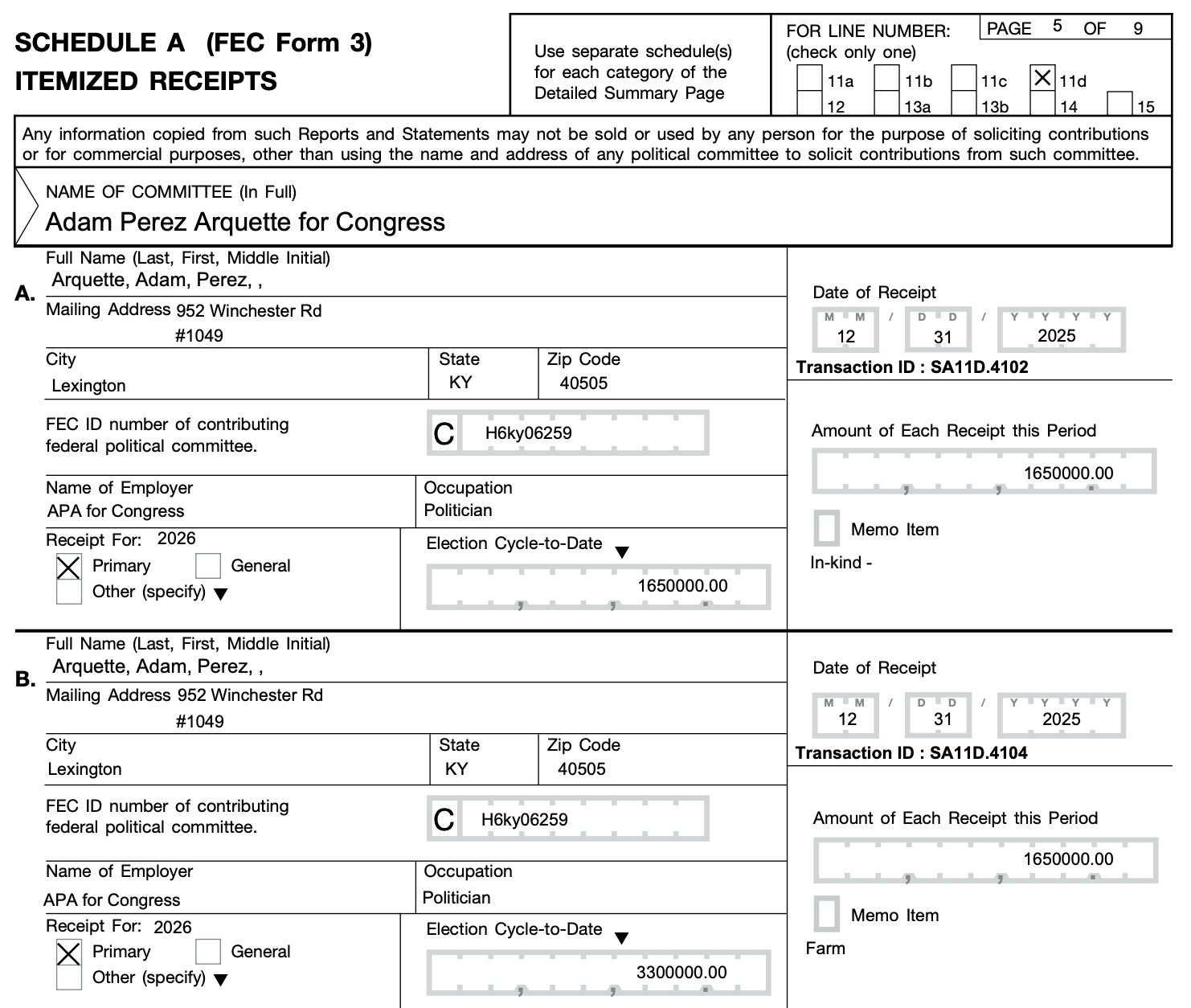

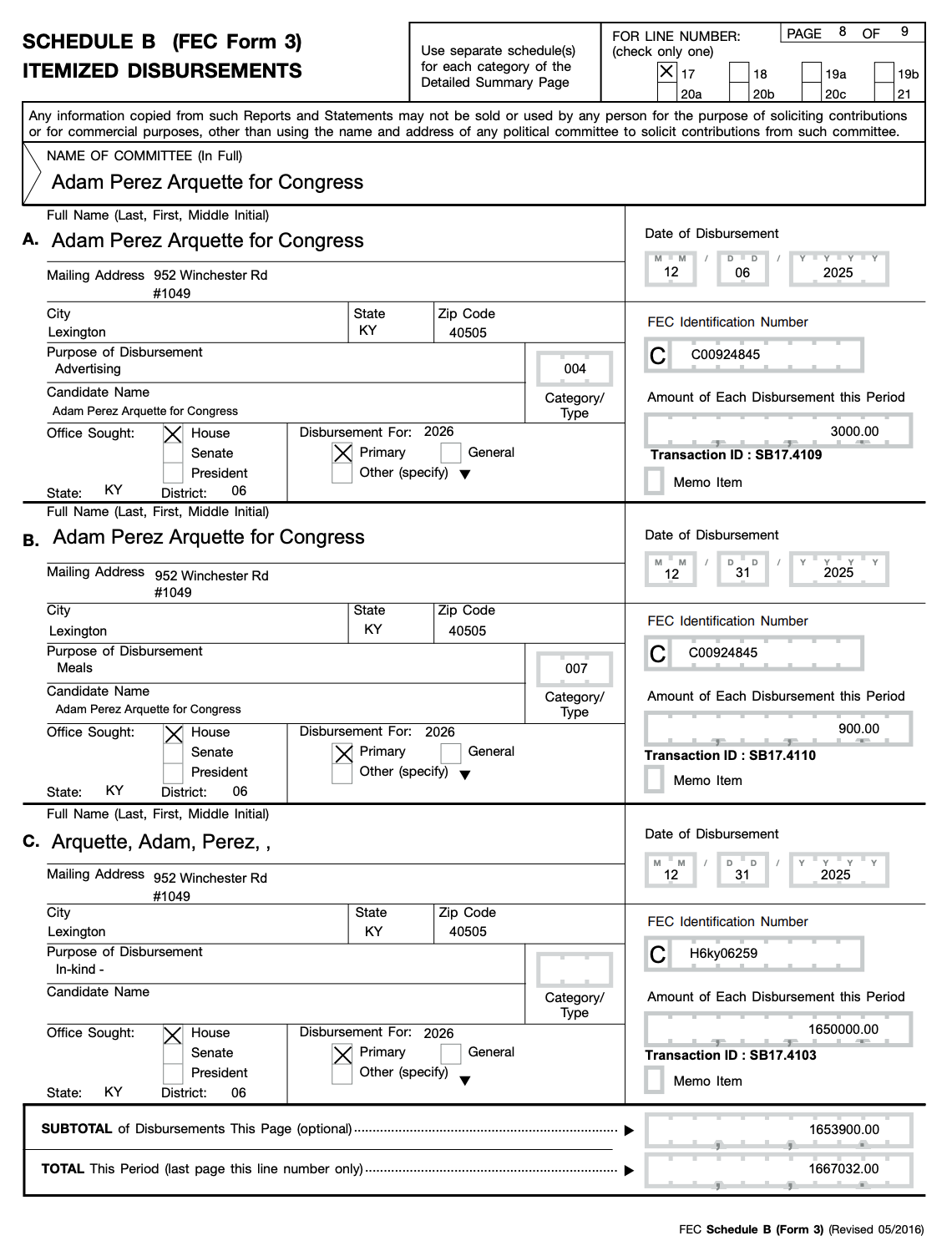

Obscure candidate hack: self-fund your campaign with $3.3 million to become the “top fundraiser” in your race, then just cut yourself a check for $1.67 million back.

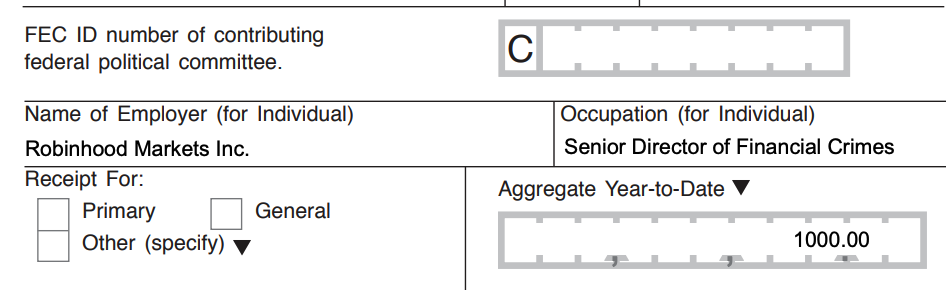

It’s hard to find much about the guy, but he describes himself as a former member of the intelligence community, wheat farmer, and restaurant worker who went to Trump University and “built a successful real estate portfolio using the tools provide [sic] by the Trump Organization”. He’s pledged to “get rid of the D.I.R.T.: Drugs, Illegals, Regulations, Taxes”.