
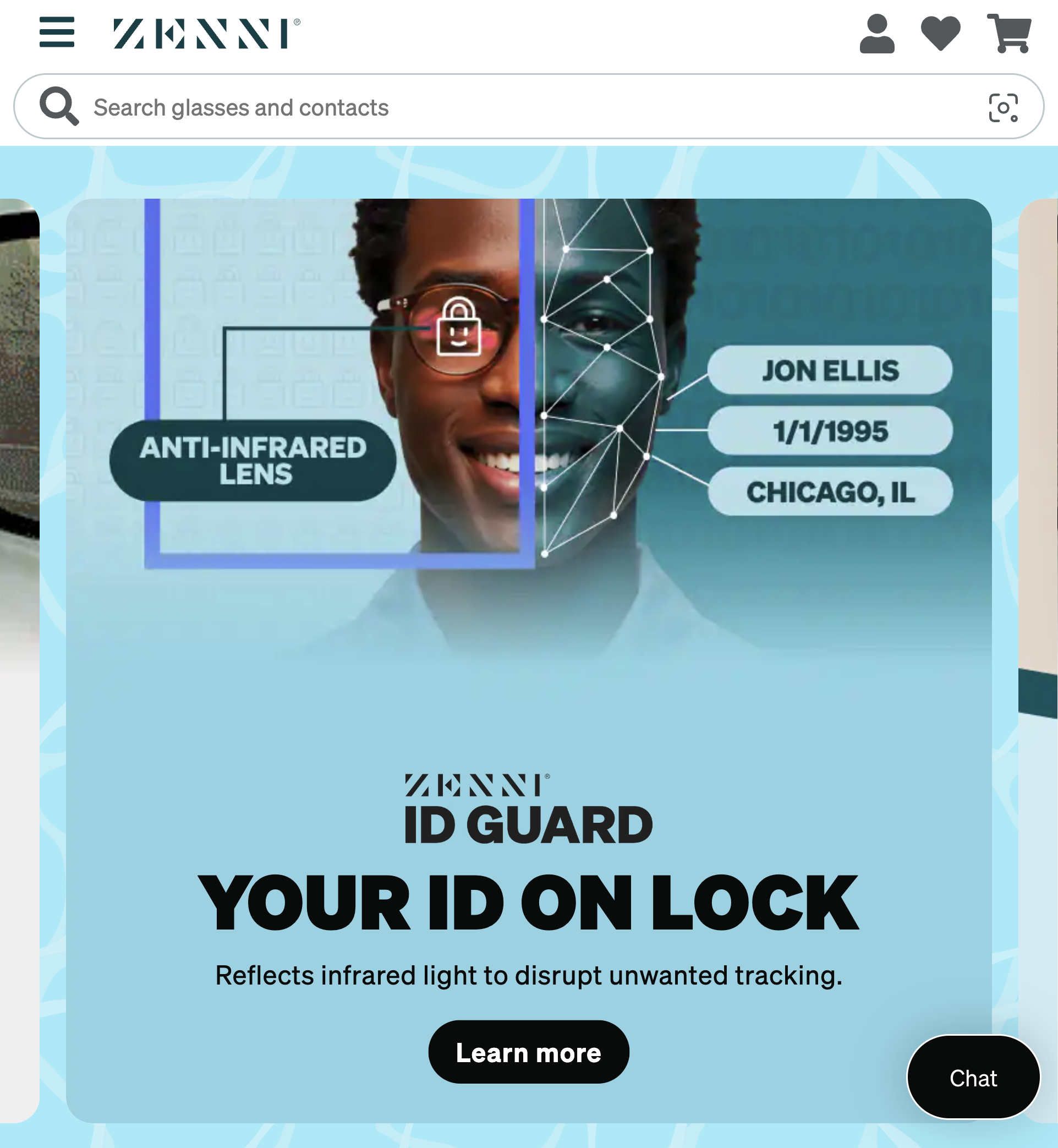

We identify four broad themes that should concern policymakers:

1. Workers struggle to make ends meet.
2. Workers perform critical, skilled work but are increasingly hamstrung by a lack of control over the work process. This results in lower work output and, in turn, higher-risk AI systems.
3. With limited or no access to mental health benefits, workers are unable to safeguard themselves, even as they act as a first line of defense protecting millions of people from harmful content and imperfect AI systems.
4. Because they are deeply involved in every aspect of building AI systems, workers recognize the wide range of risks these systems pose to themselves and to society at large.